In this blog I am going to talk about backup and restore, recovery models within SQL Server, and replication choices, and then spend some time on log shipping (one of these days I will write a similar blog for Oracle/DB2 databases). I am only going to scratch the surface as far as replication choices within SQL Server are concerned. I plan to write another blog dedicated to replication, as it’s a vast and interesting topic that needs its own blog space. I would, however, like to mention replication here as it completes the whole picture.

There are three types of recovery models for SQL Server:

- Simple

- Full

- Bulk logged

Before I explain these three recovery models I need to touch on a concept that is generic across databases: data files, log files and undo files. I am using these general terms because the concept is quite similar across databases; the difference is in the implementation details of how each database stores persistent and transactional data across these three kinds of files (I knew if I had paid attention during my data structure lectures in college days I would have a database in my name, thinking big).

Data files, log/transactional files and undo files

I am oversimplifying the explanation here. If you really want to get into the details, I would suggest buying some books by the author “Thomas Kyte” (just Google him); he is a guru in Oracle DB, but by reading his books you will gain a lot of insight into what I am about to say in a few sentences. Each database has many background threads, called worker threads, that write data into one of the three files (data file, log file and/or undo file). In general, whatever is written to the data files is committed/persistent information, whatever is written to the undo files is information the database can use to perform an undo operation, and the log files, also called transaction files, hold transaction details of committed as well as uncommitted data. Let me emphasize the “uncommitted” part: the log files have all the information needed to bring the database back to a consistent state. Typically, when you issue a commit statement from T-SQL or PL/SQL, the information gets written to the log file first and some background thread then writes it to a data file. The undo file comes into the picture only for uncommitted data. But even for uncommitted data the database threads write the “undo” information to the log/transaction files, so yes, the transaction/log file also contains uncommitted data. Remember this; it took me a while to understand this minute piece of information. These background threads are named differently in each database and are implementation specific.
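For SQL Server specifically, one quick way to see this split is to list the files behind a database. This is only a minimal sketch, assuming a database named SAMPLE_DB (the name used in the examples later in this blog). SQL Server reports data files (ROWS) and log files (LOG) here, and keeps its undo information inside the transaction log rather than in a separate undo file.

```sql
USE SAMPLE_DB;  -- assumed database name, taken from the later examples
GO

-- One row per file: type_desc is ROWS for data files and LOG for log files.
SELECT name          AS logical_name,
       type_desc,
       physical_name,
       size * 8 / 1024 AS size_mb   -- size is reported in 8 KB pages
FROM sys.database_files;
```

The logical names returned here are the same names that the MOVE option of RESTORE expects.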

Backup types (Full database backup, differential database backup and log file backups)

Before I start explaining the recovery models we need to know that SQL Server allows full database backups, differential database backups (sometimes loosely called incremental backups) and log backups (it also provides other backup variations, like file backups, but I am going to leave those out of this blog as they are not as relevant to the concept I am trying to explain here). Full and differential backups are data file backups: they include only as much of the log as is needed to make the restored database consistent, and they are not a substitute for log backups, whereas log backups back up the transaction log only.

A full backup is needed before a differential and/or log backup can be taken. The full backup is referred to as the differential base (basically a starting point) for differential backups. Here are a few rules that you need to know about these three backup types before we get into the recovery models:

- A full backup is mandatory for differential as well as log backup

- A differential backup is not mandatory for a log backup but a full backup is mandatory

- During restore, you only need to apply the last successful differential backup, as each differential backup contains everything that was captured in the previous differential backups. You do, however, need to restore the full database backup (the differential base) first; without it the differential backup is useless

- For a database restore, look for the last successful differential backup and then apply all the transaction log backups taken since that differential backup (or since the last full backup, if there is no differential). NOTE: You always need a full backup as a starting point or base for differential as well as log backups.

- You can restore a database without needing a differential backup as long as you have the last full backup and all the log file backups since then (basically all the log chain intact) and that you haven’t changed the recovery model since then.

- Log backups are not supported under the simple recovery model (hence I do not suggest it for production environments)
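To check these rules against what has actually been backed up, the backup history that SQL Server keeps in msdb can be queried. The following is a rough sketch, assuming the database is called SAMPLE_DB as in the later examples; it lists every recorded backup in order so you can confirm there is a full backup at the start and no gap in the log backups after it.

```sql
-- Backup history for one database, oldest first.
-- type: D = full, I = differential, L = log.
SELECT bs.type,
       bs.backup_start_date,
       bs.backup_finish_date,
       bs.first_lsn,
       bs.last_lsn,
       bmf.physical_device_name
FROM msdb.dbo.backupset AS bs
JOIN msdb.dbo.backupmediafamily AS bmf
  ON bmf.media_set_id = bs.media_set_id
WHERE bs.database_name = N'SAMPLE_DB'   -- assumed database name
ORDER BY bs.backup_finish_date;
```

For an unbroken log chain, the first_lsn of each log backup should match the last_lsn of the log backup before it.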

Typically I would recommend one weekly full backup and one daily differential backup, with many log backups during the day at 15-minute or 30-minute intervals (this is my production environment recommendation). It should be noted that how you plan to use these three backup types helps determine which recovery model to use.

Recovery Models

With this understanding, let’s get to the recovery models. NOTE: I have left a lot of details out of the data/log/undo file explanation for simplicity. Just remember the rule: the log/transaction file always has committed as well as uncommitted data and has enough information to recover a database (a concept that you need to digest before I speak about log shipping later on in this blog).
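Before going through the models one by one, it helps to know how to see which model a database is using and how to change it. A minimal sketch, again assuming a database named SAMPLE_DB:

```sql
-- Show the recovery model (SIMPLE, FULL or BULK_LOGGED) of every database.
SELECT name, recovery_model_desc
FROM sys.databases
ORDER BY name;

-- Switch one database to the full recovery model.
ALTER DATABASE SAMPLE_DB SET RECOVERY FULL;
```

After switching from simple to full, take a full (or differential) backup so that the log backup chain has a starting point.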

Simple Recovery Model

It is the most basic recovery model and should never be used beyond testing and development environments. No transaction log backups are taken, and recovery is based on the last full database backup, optionally followed by the last differential backup taken against that full backup (the differential base).

Full Recovery Model

This is my recommended recovery model for production environments. It allows data file as well as log file backups. I am going to steal a nice picture from the MSDN website which shows how to minimize work loss in a production environment, and it aligns with my recommendation for the production environment.

Basically this pretty picture is saying the following

Let’s talk of possible server failure scenarios

Scenario 1

Say the server fails right after transactional/log backup – t14; then you can restore the database using one of the following choices

Choice 1

Choice 2

Choice 3

Of these choices, the last one (choice 3) is the fastest way to recover the database.

Scenario 2

Say the server fails after the transactional/log backup t14, but there are some transactions that have not been backed up yet (for example, say your transaction log backup interval is 30 minutes and the server fails 20 minutes after the t14 backup, before the next scheduled log backup, t15).

In this scenario all the choices mentioned in the earlier scenario can be used, with one slight deviation: in order to recover the transactions that were not backed up after t14, you will have to apply one more transaction log backup, called the tail-log backup.

What is tail of the log or tail-log file?

Basically, after the server failure, if you are able to bring up the server and the SQL Server instance on it, you can first back up the current active transaction log using the BACKUP LOG T-SQL command with the NORECOVERY option (more on the options for the BACKUP T-SQL command later). You do this before you start the restore process. This backup is the tail of the transaction log after the t14 transaction log backup. If you are able to back up this tail, then after performing the restore using any one of the three choices mentioned above, you apply the tail-log backup at the very end, after successfully applying the t14 transaction log backup. By doing this you will be able to restore the database to the point of failure.

If for whatever reason you are not able to take this tail-log backup, all the transactions that occurred after t14 will be lost and you will not be able to restore the database to the point of failure. Hence it’s important to keep the interval between two transaction log backups short (I suggest a 15-minute or 30-minute interval for production environments).
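A tail-log backup can be sketched as follows. The file path is purely hypothetical, and the inline DECLARE initialization assumes SQL Server 2008 or later. The first form is for a database that is still accessible; the commented-out variant with NO_TRUNCATE is the one usually tried when the data files are damaged but the log file is still readable.

```sql
USE master;   -- run from master, not from the database being backed up
GO

DECLARE @tail_bu_fn nvarchar(260) = N'D:\Backups\SAMPLE_DB_tail.trn';  -- hypothetical path

-- Back up the tail of the log and leave SAMPLE_DB in the RESTORING state,
-- so no new transactions can sneak in before the restore starts.
BACKUP LOG SAMPLE_DB TO DISK = @tail_bu_fn WITH NORECOVERY;

-- Variant for a damaged database (data files lost, log file intact):
-- BACKUP LOG SAMPLE_DB TO DISK = @tail_bu_fn WITH NO_TRUNCATE;
```

The resulting file is then restored last, after the t14 log backup, as the final step of the restore sequence.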

Bulk logged Recovery Model

I am not going to spend much time explaining this recovery model as it’s a variation of the full recovery model and should be used only if you have many large-scale bulk operations going on in the database. In such environments this recovery model trades smaller log files for increased work-loss exposure, as bulk operations are only minimally logged rather than fully logged. This also means that you cannot do a point-in-time recovery to a point inside a log backup that contains bulk-logged operations. It’s recommended to switch to the bulk-logged recovery model just before large-scale bulk operations are performed and then, once those operations have completed successfully, to flip the recovery model back to the full recovery model.
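The switch-and-flip-back routine described above might look roughly like this. The file paths are hypothetical and the bulk operation itself is left as a placeholder; the log backups on either side are my assumption of a sensible way to keep the window without point-in-time recovery as small as possible.

```sql
DECLARE @log_before_fn nvarchar(260) = N'D:\Backups\SAMPLE_DB_before_bulk.trn';  -- hypothetical path
DECLARE @log_after_fn  nvarchar(260) = N'D:\Backups\SAMPLE_DB_after_bulk.trn';   -- hypothetical path

-- Log backup just before the switch, so everything up to here
-- is still recoverable to a point in time.
BACKUP LOG SAMPLE_DB TO DISK = @log_before_fn;

ALTER DATABASE SAMPLE_DB SET RECOVERY BULK_LOGGED;

-- ... run the bulk operation here (for example BULK INSERT or an index rebuild) ...

ALTER DATABASE SAMPLE_DB SET RECOVERY FULL;

-- Log backup right after flipping back; it contains the bulk-logged changes.
BACKUP LOG SAMPLE_DB TO DISK = @log_after_fn;
```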

BACKUP and RESTORE T-SQL command options

The BACKUP and RESTORE T-SQL commands have so many options that I will focus only on those that are relevant to this blog. Visit MSDN for a complete list of options.

BACKUP DATABASE SAMPLE_DB TO DISK = @full_bu_fn WITH COMPRESSION

BACKUP DATABASE SAMPLE_DB TO DISK = @diff_bu_fn

WITH DIFFERENTIAL, COMPRESSION

BACKUP LOG SAMPLE_DB TO DISK = @log_bu_fn WITH COMPRESSION

The COMPRESSION option is available in SQL Server 2008 and above (refer to MSDN for the exact editions of SQL Server 2008 that support this option).
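The commands above use variables for the backup file names without showing where they come from. Here is one possible way to fill them in for the frequent log backups, with a timestamp in the name so that each run writes a new file. The folder and naming pattern are only assumptions for illustration.

```sql
-- Build a timestamp like 20240131_143000 from the current date and time.
DECLARE @stamp nvarchar(20) =
    REPLACE(REPLACE(REPLACE(CONVERT(nvarchar(19), GETDATE(), 120), '-', ''), ':', ''), ' ', '_');

-- Hypothetical folder and naming pattern.
DECLARE @log_bu_fn nvarchar(260) = N'D:\Backups\SAMPLE_DB_log_' + @stamp + N'.trn';

-- CHECKSUM makes the backup verify page checksums as it reads the data.
BACKUP LOG SAMPLE_DB TO DISK = @log_bu_fn WITH COMPRESSION, CHECKSUM;

-- Optional sanity check that the backup file is readable.
RESTORE VERIFYONLY FROM DISK = @log_bu_fn WITH CHECKSUM;
```

The same pattern works for the full and differential backups by changing the file name and the BACKUP statement.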

The RESTORE T-SQL command can leave the database in one of the following states: RECOVERY, NORECOVERY or STANDBY.

RECOVERY

The database is available for read/write. Once a RESTORE command is executed with this option, it’s not possible to apply any more backups to the database beyond this recovery point. Use this option on the last restore when you are in the process of recovering a database.

Example

RESTORE DATABASE SAMPLE_DB_DR

FROM disk = @fullrestore_fn with file = 1,

MOVE 'SAMPLE' TO @mdf_full_fn, move 'SAMPLE_Log' to @ldf_full_fn, REPLACE, RECOVERY

REPLACE will overwrite any existing database named “SAMPLE_DB_DR”.
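The logical file names passed to MOVE have to match the names stored inside the backup. If you are not sure what they are, you can ask the backup file itself. A small sketch, assuming a hypothetical backup path:

```sql
DECLARE @fullrestore_fn nvarchar(260) = N'D:\Backups\SAMPLE_DB_full.bak';  -- hypothetical path

-- Lists the files contained in the backup; the LogicalName column
-- holds the names to use with MOVE.
RESTORE FILELISTONLY FROM DISK = @fullrestore_fn WITH FILE = 1;
```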

NORECOVERY

Use this option when you are in the process of performing a database recovery but haven’t yet applied all the backup files to the database.

Example

RESTORE LOG SAMPLE_DB_DR

FROM disk = @local_logrestore_fn

WITH FILE = 1, NORECOVERY

FILE refers to the position of the backup set within the backup file. If a backup file contains only one backup, FILE is always “1”.
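Putting RECOVERY and NORECOVERY together, a complete restore sequence could be sketched like this. All file paths are hypothetical and the logical names 'SAMPLE' and 'SAMPLE_Log' are taken from the example above; in practice there would be one RESTORE LOG per log backup, applied in order.

```sql
DECLARE @fullrestore_fn nvarchar(260) = N'D:\Backups\SAMPLE_DB_full.bak';   -- hypothetical
DECLARE @diffrestore_fn nvarchar(260) = N'D:\Backups\SAMPLE_DB_diff.bak';   -- hypothetical
DECLARE @logrestore_fn  nvarchar(260) = N'D:\Backups\SAMPLE_DB_log_1.trn';  -- hypothetical
DECLARE @mdf_full_fn    nvarchar(260) = N'D:\Data\SAMPLE_DB_DR.mdf';        -- hypothetical
DECLARE @ldf_full_fn    nvarchar(260) = N'D:\Data\SAMPLE_DB_DR_log.ldf';    -- hypothetical

-- Optional: list the backup sets in the file; the Position column is the FILE number.
RESTORE HEADERONLY FROM DISK = @fullrestore_fn;

-- 1. Full backup (the differential base), more restores to follow.
RESTORE DATABASE SAMPLE_DB_DR
FROM DISK = @fullrestore_fn
WITH FILE = 1,
     MOVE 'SAMPLE' TO @mdf_full_fn,
     MOVE 'SAMPLE_Log' TO @ldf_full_fn,
     REPLACE, NORECOVERY;

-- 2. Last differential backup, more restores to follow.
RESTORE DATABASE SAMPLE_DB_DR
FROM DISK = @diffrestore_fn
WITH FILE = 1, NORECOVERY;

-- 3. Every log backup taken after the differential, in order.
RESTORE LOG SAMPLE_DB_DR
FROM DISK = @logrestore_fn
WITH FILE = 1, NORECOVERY;

-- 4. Nothing left to apply: bring the database online.
RESTORE DATABASE SAMPLE_DB_DR WITH RECOVERY;
```

Keeping every step at NORECOVERY and recovering in a separate final statement avoids accidentally closing the restore sequence one backup too early.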

STANDBY

This option can be used in place of the NORECOVERY option if you wish to execute SELECT statements on the database between restores. It is also used as a warm-standby option for reporting purposes (more on this when I speak on log shipping). STANDBY and NORECOVERY are mutually exclusive, and the differences between the two are listed below

- STANDBY allows the users to connect to the database and run SELECT statements against it. NORECOVERY will not allow any SELECTS to be performed on the database. Use STANDBY if you wish to perform some reporting on the database. NOTE: You cannot create any additional tables or stored procedures in the STANDBY database; it’s in a read-only state.

- For STANDBY you need to specify a temporary undo filename where all the undo information is stored. The reason NORECOVERY does not allow SELECT is that the database can contain uncommitted data after a restore with the NORECOVERY option. With STANDBY, the uncommitted changes are rolled back so the database is consistent and readable, and the undo information is saved in the undo file; the database needs this undo file for subsequent restores.

If you have started getting headaches from my explanation of NORECOVERY and STANDBY, just remember this: STANDBY allows SELECTs and keeps the database in a read-only state; NORECOVERY does not allow any SELECT.

Example

If you wish to restore the database in STANDBY mode with full backup file then use the following T-SQL command

RESTORE DATABASE SAMPLE_DB_DR

FROM DISK= @fullrestore_fn WITH FILE = 1,

MOVE 'SAMPLE_DB' TO @mdf_full_fn, MOVE 'SAMPLE_DB_Log' TO @ldf_full_fn, REPLACE, STANDBY = @UNDO_FILENAME

The file named by @UNDO_FILENAME stores the undo information for uncommitted transactions. This file will be needed for subsequent restores. The following example shows how to restore the database in STANDBY mode by applying transaction log files.

RESTORE LOG SAMPLE_DB_DR

FROM DISK = @local_logrestore_fn

WITH FILE = 1, STANDBY = @UNDO_FILENAME
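To confirm which state the target database ended up in after a restore, sys.databases can be checked. This is a small sketch using the SAMPLE_DB_DR name from the examples above.

```sql
-- A database restored WITH STANDBY shows is_in_standby = 1;
-- a database restored WITH NORECOVERY shows state_desc = 'RESTORING'.
SELECT name, state_desc, is_in_standby, is_read_only
FROM sys.databases
WHERE name = N'SAMPLE_DB_DR';

-- At failover time, once the last log backup has been applied,
-- the standby can be brought online for read/write with:
-- RESTORE DATABASE SAMPLE_DB_DR WITH RECOVERY;
```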

LOG SHIPPING (OR TRANSACTION LOG SHIPPING)

If you have followed my blog so far, then log shipping is nothing but copying the backup files (specifically the transaction log backups) to the standby/backup server and applying them to the standby database. Log shipping is a cheap and practical alternative to replication and should be considered if you are short on budget. The main difference between log shipping and replication is that with log shipping the target database is not available for read/write operations. You can have read operations on the target database if you select the STANDBY option of the RESTORE T-SQL command as explained earlier, but you can never write to the target database with log shipping. Basically, log shipping provides an active-passive (or active-cold) failover option, whereas replication can provide an active-active failover option. SQL Server provides a task to configure log shipping via SQL Server Management Studio. I prefer to use SQL Server Management Studio for configuring log shipping; however, if you wish to custom code your log shipping solution, then all you have to do is the following

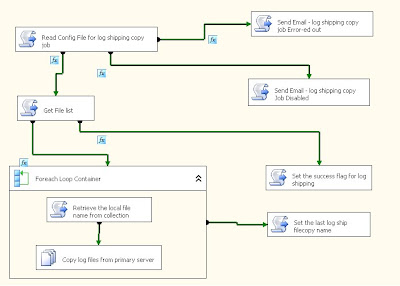

I wrote a few simple SSIS jobs which were deployed as jobs to run from the SQL Server Agent at scheduled intervals to perform log shipping.

The above figure shows the SSIS job that does the database backups (full, differential and log; NOTE: technically the differential backup is not needed for log shipping). The time intervals are based on my recommendations for the full recovery model: a full backup once a week, a differential backup once a day and a transactional/log backup every 15 or 30 minutes.

The above figure shows the SSIS job that copies the backup files from the primary server to the target server. I like to schedule it at an interval close to the transaction log backup interval of 15 or 30 minutes. This helps you ship/copy the log files across the wire to the target server as quickly as you can, ready to be applied there. I have set this job to run at a 20-minute interval.

The above SSIS job runs on the target server and applies the transaction log files to the target database. I schedule this SSIS job to run every hour, even though this interval is longer than the transaction log backup interval or the copy interval. I am fine with this longer interval as the copy SSIS job has already copied the transaction log files from the source/primary server to the target server directory at the shorter interval of 20 minutes. NOTE: you do not need an SSIS job to do log shipping, but I wanted to try something different so I wrote SSIS jobs. I would recommend using SQL Server Management Studio’s log shipping task for performing log shipping versus this elaborate method.
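Whichever way the restore job is implemented, it is worth checking on the target server that the log backups are really being applied. One possible check, sketched here under the assumption that the target database is named SAMPLE_DB_DR, is to read the restore history that SQL Server records in msdb.

```sql
-- Most recent restores applied to the standby database, newest first.
-- restore_type: D = full, I = differential, L = log.
SELECT TOP (20)
       rh.destination_database_name,
       rh.restore_date,
       rh.restore_type,
       bmf.physical_device_name
FROM msdb.dbo.restorehistory AS rh
JOIN msdb.dbo.backupset AS bs
  ON bs.backup_set_id = rh.backup_set_id
JOIN msdb.dbo.backupmediafamily AS bmf
  ON bmf.media_set_id = bs.media_set_id
WHERE rh.destination_database_name = N'SAMPLE_DB_DR'   -- assumed target name
ORDER BY rh.restore_date DESC;
```

If the newest restore_date is much older than the restore job interval, the copy or restore job deserves a closer look.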

REPLICATION

I am not going to elaborate on replication besides explaining the three basic types as I think this topic needs its own blog and I plan to write one in the future.

The replication concept is based on a publisher and subscriber model.

There are basically three types of replication: snapshot, merge and transactional.

Snapshot replication

It’s the simplest to configure. It’s a one-way replication process, which means a snapshot of the data is taken at regular intervals at the publisher and then that snapshot is replicated to all the subscribers. Replication from the subscribers back to the publisher is not possible. Snapshot replication does not provide real-time replication of data, as the snapshot interval determines how stale the subscriber’s database is. Snapshot replication is suited for replicating small volumes of data.

Merge replication

Both merge and transactional replication provide near real-time data replication in an incremental manner. They both allow data to flow from the publisher to the subscribers as well as from the subscribers to the publisher. As the data flow can be two-way, they both provide conflict resolution options. Merge replication is better suited for updates at the subscriber’s end than transactional replication and is the preferred choice between the two if you are expecting a lot of updates at the subscriber’s end. Merge replication is typically used in a server-to-client environment. It relies on triggers to perform replication.

Transactional replication

Transactional replication also provides two-way replication. It is, however, better suited for one-way replication of data in near real time and is used in a server-to-server environment where a lot of updates occur at the publisher’s end. Unlike merge replication, which uses triggers, transactional replication relies on the SQL Server Snapshot Agent, Log Reader Agent and Distribution Agent to perform replication.

General comments

I hope you find this blog informative. SQL Server provides so many options for backup and restore as well as replication that you will never be short of ideas. I haven’t even talked about the Maintenance Plan Wizard within SQL Server Management Studio, something you should look into as well. It provides you with a wizard to configure certain SQL Server specific maintenance tasks. I hope to write another blog on replication and a similar blog for Oracle/DB2 databases in the near future.